Everyone talks about better models, faster GPUs, and bigger foundation architectures.

But real-world robotics doesn’t fail at inference. It fails upstream, at data design.

Unlike traditional computer vision, robotics systems don’t learn from isolated static images. They learn from continuous, multimodal streams (video, LiDAR point clouds, inertial measurements, GPS, force feedback, and more) that must be synchronized in time and space.

That creates three structural challenges most teams underestimate.

- First, temporal consistency matters more than single-frame accuracy. A bounding box that looks right in one frame but drifts across a sequence can break motion prediction, trajectory planning, or object permanence.

- Second, sensor fusion is fragile. If LiDAR, camera, and IMU data are not precisely aligned in time and space, the robot does not just misclassify objects. It misinterprets reality.

- Third, edge cases are not optional. Robotics systems fail in the long tail. Odd lighting, partial occlusion, and unexpected human behavior are rare, expensive to label, and disproportionately important.
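To make the first two challenges concrete, here is a minimal Python sketch, not a production QA tool. It assumes 2D boxes stored as (x1, y1, x2, y2) tuples and timestamps in seconds; the function names, the 0.5 IoU threshold, and the 0.05 s tolerance are illustrative choices. `flag_drift` flags consecutive frames where a tracked box overlaps its previous position poorly (low intersection-over-union), and `match_nearest` pairs each camera frame with the closest LiDAR timestamp, leaving it unmatched when nothing falls within tolerance.

```python
from bisect import bisect_left


def iou(a, b):
    """Intersection-over-union of two axis-aligned boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def flag_drift(track, min_iou=0.5):
    """Return indices i where box i and box i + 1 overlap less than min_iou.

    Hypothetical QA check: a low overlap may be a labeling jump or genuinely
    fast motion, so flagged frames are candidates for human review.
    """
    return [i for i in range(len(track) - 1) if iou(track[i], track[i + 1]) < min_iou]


def match_nearest(camera_ts, lidar_ts, tolerance):
    """Pair each camera timestamp with the nearest LiDAR timestamp.

    lidar_ts must be sorted ascending. A camera frame with no LiDAR sample
    within `tolerance` seconds is paired with None instead of a bad match.
    """
    pairs = []
    for t in camera_ts:
        i = bisect_left(lidar_ts, t)
        # The nearest sample is either just before or just after the insertion point.
        candidates = [j for j in (i - 1, i) if 0 <= j < len(lidar_ts)]
        best = min(candidates, key=lambda j: abs(lidar_ts[j] - t), default=None)
        if best is not None and abs(lidar_ts[best] - t) <= tolerance:
            pairs.append((t, lidar_ts[best]))
        else:
            pairs.append((t, None))
    return pairs


track = [(0, 0, 10, 10), (1, 0, 11, 10), (30, 30, 40, 40)]
print(flag_drift(track))  # → [1]: the box jumps between frames 1 and 2
print(match_nearest([0.0, 0.1, 0.5], [0.02, 0.11, 0.3], tolerance=0.05))
# → [(0.0, 0.02), (0.1, 0.11), (0.5, None)]
```

Real sensor fusion also depends on spatial calibration (extrinsics between sensors), which this sketch does not cover; it only illustrates why timing gaps should surface as explicit "no match" results rather than silent misalignment.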

Labeling for robotics is not just annotation. It is real-world modeling. Poor data pipelines do not just slow iteration. They introduce safety risk.

Takeaway.

If you are building robotics or autonomy products and your data strategy looks like computer vision labeling at scale, you are already behind. Competitive advantage will come from teams that treat data as infrastructure, not an afterthought.

Why this article?

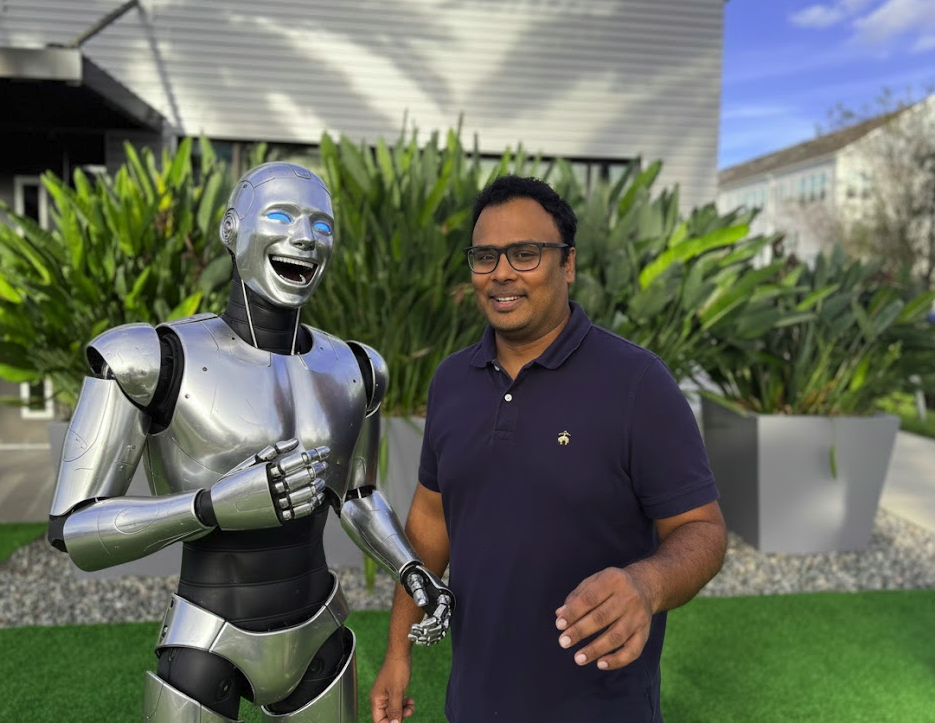

We've built AI, and we've built hardware. We've been our own client zero, developing in-house data collection and annotation capabilities. We're now expanding those capabilities to help others. We will continue to prioritize our thrift customers, and a further goal is to support their missions by helping their team members find jobs in AI.

#ThoughtLeadership #Robotics #AIInfrastructure #MachineLearning #Autonomy